Podium delivers multi-language AI voice support at scale

- Location: Lehi, Utah
- Industry: Customer Engagement
- Funding: Series D ($3B+ valuation)

Use Cases:

- Multi-language voice agent testing
- Load testing and performance validation
- Real-time monitoring and analytics
- Accent and dialect coverage

Meet Podium

Podium is a customer engagement platform that helps businesses manage conversations across messaging, voice, and reviews.

With Hamming, Podium was able to

The Challenge: Scaling Voice Agent Quality Across Languages and Markets

Podium's voice agents needed to work reliably across multiple languages, accents, and regional phrasing differences. Manual testing couldn't capture these nuances at scale.

Each language required careful validation, but relying on manual reviews and native-speaker testing for every new feature was not sustainable.

Peak traffic periods also exposed issues that were hard to reproduce with manual, low-volume testing alone.

The Multi-Language Testing Problem

- Language coverage gaps: Manual testing couldn't keep up with multiple languages and regional variations.
- Slow validation cycles: Each new capability required repeated manual testing across languages and accents.
- Peak traffic blind spots: Issues appeared under load conditions that were hard to reproduce manually.
- Regional nuance risk: Subtle phrasing differences went undetected without scaled testing.

The Impact

| Metric | Result |
|---|---|
| Languages tested with accent variations | 8+ |
| Test scenarios executed monthly | 5,000+ |
| Reduction in manual testing time | 90% |
| Concurrent calls simulated | 1,000+ |


How Hamming Solved Multi-Language Voice Agent Testing

Multi-Language Test Scenario Creation

Hamming enabled Podium to create comprehensive test scenarios across its supported languages. The platform helps cover regional phrasing differences, levels of formality, and local caller expectations without relying solely on manual testing.

These scenarios include variations in tone, intent, and customer context so the team can validate how agents respond across markets.
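To make the idea of a scenario matrix concrete, here is a minimal Python sketch that expands every language and accent pair against a set of intents and tones. It is illustrative only: the `Scenario` type, the `build_scenarios` helper, and the specific languages, accents, intents, and tones are hypothetical and are not Hamming's API or Podium's actual test configuration.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical test dimensions, chosen for illustration only.
LANGUAGES = {
    "en": ["American", "British", "Australian", "Indian"],
    "fr": ["European", "Canadian"],
}
INTENTS = ["book_appointment", "reschedule", "ask_hours"]
TONES = ["neutral", "frustrated"]


@dataclass(frozen=True)
class Scenario:
    """One synthetic caller profile to run against the voice agent."""
    language: str
    accent: str
    intent: str
    tone: str


def build_scenarios(
    languages: dict[str, list[str]],
    intents: list[str],
    tones: list[str],
) -> list[Scenario]:
    """Expand every (language, accent) pair against every intent and tone."""
    scenarios = []
    for language, accents in languages.items():
        for accent, intent, tone in product(accents, intents, tones):
            scenarios.append(Scenario(language, accent, intent, tone))
    return scenarios


if __name__ == "__main__":
    scenarios = build_scenarios(LANGUAGES, INTENTS, TONES)
    print(len(scenarios))
```

Because the count multiplies with each new dimension, even this small example yields 36 scenarios (6 language and accent pairs, times 3 intents, times 2 tones), which is part of why manual coverage stops scaling quickly.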


Load Testing and Performance Validation

Podium's voice agents handle traffic spikes during business hours, launches, and seasonal peaks. Hamming's load testing simulates 1,000+ concurrent calls across different languages, measuring response times, accuracy, and system stability under realistic conditions.

Testing under load helped surface performance issues before they reached production.
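A load test of this kind can be sketched with Python's `asyncio`, assuming each synthetic call can be awaited as a coroutine. In the sketch below, `simulate_call` and `run_load_test` are hypothetical names, and `simulate_call` is a stub that sleeps for a random interval instead of calling a real voice agent, so it only exercises the concurrency and latency-reporting logic. Measuring accuracy would require a real agent and an evaluation step, which this example omits.

```python
import asyncio
import random
import statistics
import time


async def simulate_call(call_id: int, language: str) -> float:
    """Hypothetical stub for one synthetic call; returns its latency in seconds.

    A real harness would dial the voice agent here using call_id and language.
    This stub only sleeps for a random interval so the example runs offline.
    """
    start = time.perf_counter()
    await asyncio.sleep(random.uniform(0.01, 0.05))
    return time.perf_counter() - start


async def run_load_test(concurrency: int, languages: list[str]) -> dict[str, float]:
    """Start `concurrency` calls at once, assigned round-robin across languages,
    and summarize their latencies."""
    latencies = await asyncio.gather(
        *(simulate_call(i, languages[i % len(languages)]) for i in range(concurrency))
    )
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(latencies, n=20)[-1]
    return {
        "calls": float(len(latencies)),
        "p50_s": statistics.median(latencies),
        "p95_s": p95,
    }


if __name__ == "__main__":
    report = asyncio.run(run_load_test(1000, ["en", "fr", "de"]))
    print(report)
```

Running it with 1,000 concurrent calls mirrors the scale described above; a production harness would also need to track failed calls and break results down per language.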


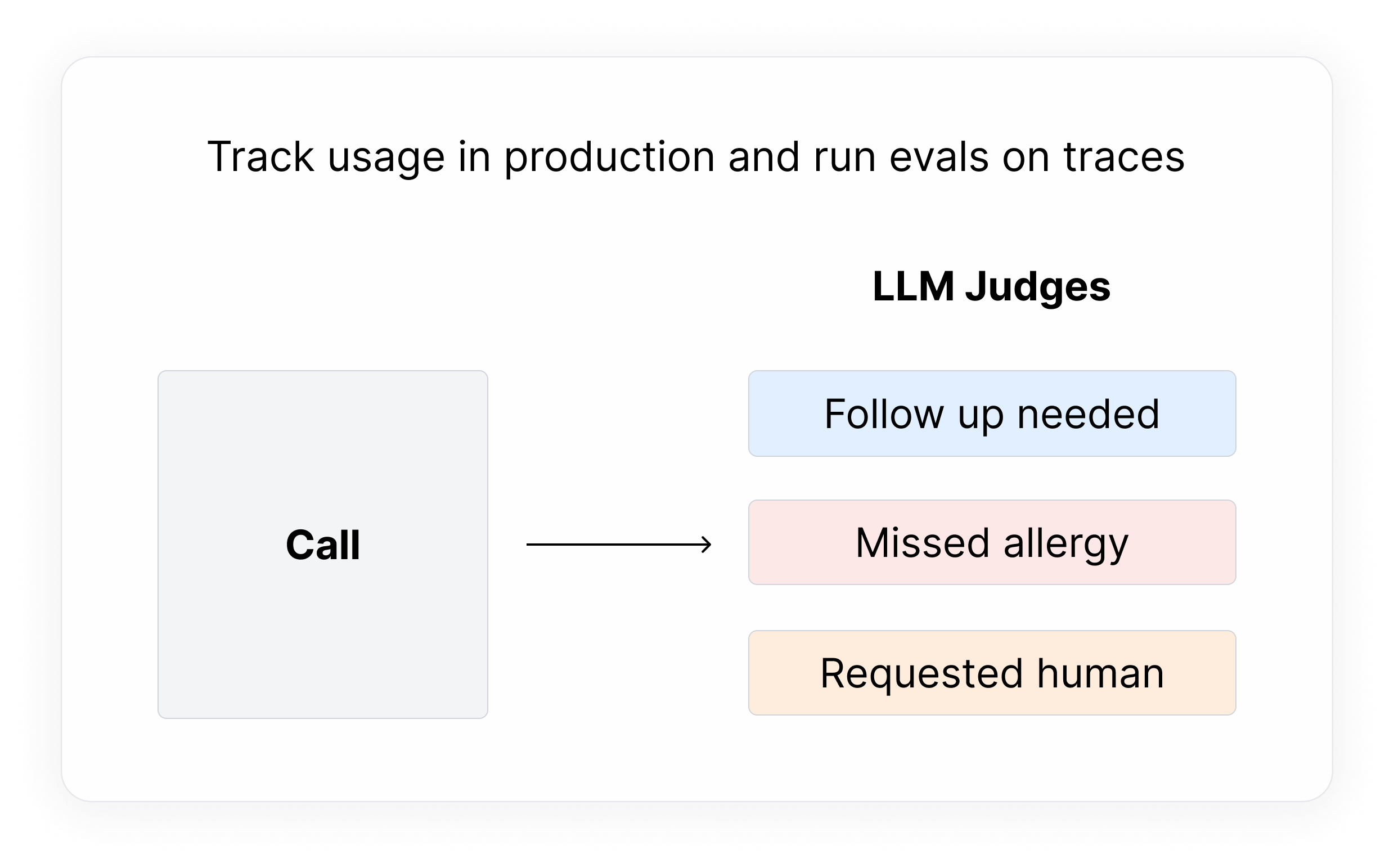

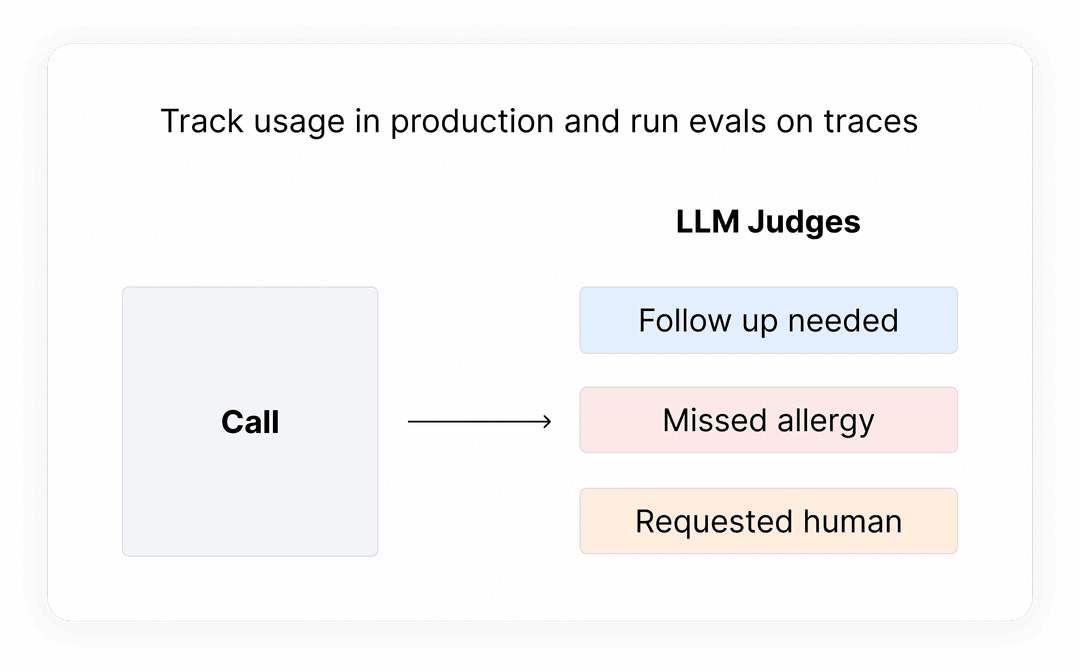

Continuous Monitoring and Optimization

Hamming's real-time monitoring tracks performance metrics across all languages and identifies issues before they impact customer experience. The platform surfaces trends like increasing response latency in specific languages or declining accuracy for certain query types.

When Podium updated their French-language model, monitoring detected a 15% drop in intent recognition for appointment-related queries within hours. The team rolled back the change and investigated, avoiding days of degraded service for French-speaking customers.
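The kind of check that flagged the French regression can be approximated by comparing per-language, per-intent accuracy between a baseline window and the current window. This Python sketch is hypothetical: the `IntentStats` type, the `find_regressions` function, the 10% alert threshold, and the sample counts are all assumptions. It also treats the drop as relative to baseline accuracy, since the case study does not say whether its 15% figure is relative or absolute.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntentStats:
    """Counts of correctly recognized intents for one (language, intent) segment."""
    correct: int
    total: int

    @property
    def accuracy(self) -> float:
        return self.correct / self.total if self.total else 0.0


Segment = tuple[str, str]  # (language, intent): a hypothetical keying scheme


def find_regressions(
    baseline: dict[Segment, IntentStats],
    current: dict[Segment, IntentStats],
    max_relative_drop: float = 0.10,
) -> list[tuple[str, str, float]]:
    """Flag segments whose accuracy fell by more than max_relative_drop
    relative to the baseline window."""
    alerts = []
    for segment, before in baseline.items():
        after = current.get(segment)
        # Skip segments with no current traffic or no baseline signal.
        if after is None or after.total == 0 or before.accuracy == 0:
            continue
        drop = (before.accuracy - after.accuracy) / before.accuracy
        if drop > max_relative_drop:
            alerts.append((segment[0], segment[1], round(drop, 3)))
    return alerts


if __name__ == "__main__":
    baseline = {
        ("fr", "book_appointment"): IntentStats(900, 1000),
        ("fr", "ask_hours"): IntentStats(800, 1000),
        ("en", "book_appointment"): IntentStats(950, 1000),
    }
    current = {
        ("fr", "book_appointment"): IntentStats(153, 200),
        ("fr", "ask_hours"): IntentStats(158, 200),
        ("en", "book_appointment"): IntentStats(190, 200),
    }
    print(find_regressions(baseline, current))
```

With these made-up counts, only the French appointment segment is flagged, at a 15% relative drop (90% down to 76.5%). The threshold is a tuning choice that trades alert noise against detection speed.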

Accent and Dialect Testing

Beyond language, Podium tests how their agents handle diverse accents within each language. English tests include American, British, Australian, Indian, and non-native speaker accents. This ensures the voice agent understands callers regardless of their background.

The team discovered their agent struggled with Scottish accents, misinterpreting common words 30% of the time. After retraining with Hamming-generated test data, accuracy improved to 95%, opening up the UK market with confidence.

Why Podium Chose Hamming

Podium evaluated multiple voice agent testing platforms before selecting Hamming. Key differentiators included native multi-language support, realistic load testing capabilities, and the ability to test accent variations without hiring specialized QA staff.

The engineering team was particularly impressed by how quickly they could set up new language tests. What previously required weeks of coordination with native speakers could now be configured in hours.

Podium chose Hamming because:

The Results

Hamming transformed how Podium approaches voice agent quality. The QA team shifted from reactive bug-fixing to proactive quality improvement. Engineers now ship updates with confidence, knowing automated tests will catch issues across all languages before they reach production.

The business impact was immediate. Podium launched in 3 new markets in the quarter after implementing Hamming, confident their voice agents would perform reliably from day one. Customer satisfaction scores for voice interactions improved 12% as quality became more consistent globally.

90% Reduction in Manual Testing Time

What used to consume the QA team's entire week now runs automatically. Engineers freed from repetitive testing now focus on improving agent capabilities and expanding language support. The team tests more scenarios in a day than they previously could in a month.

3 New Markets Launched with Confidence

Hamming's multi-language testing enabled Podium to expand into Germany, France, and Brazil within a single quarter. Each market launch was supported by comprehensive testing that validated thousands of scenarios in the local language and dialect variants.

Zero Production Outages from Voice Agent Issues

Since implementing Hamming, Podium has had zero production outages caused by voice agent defects. Load testing catches performance issues before deployment. Multi-language testing catches localization errors before they reach customers. The team ships faster because they catch problems earlier.

“Before Hamming, launching a new language was a multi-week project involving native speakers, manual testing, and crossed fingers. Now we can validate a new language in a day and launch with confidence. The test coverage we get automatically is better than what we used to achieve manually.”

Jordan Farnworth

Director of Engineering at Podium

What's Next

Podium is expanding their voice agent capabilities to support more complex multi-turn conversations and additional languages including Japanese and Korean. With Hamming's testing infrastructure in place, they're confident they can maintain quality as they scale.

The team is also exploring Hamming's analytics capabilities to identify optimization opportunities across their existing language support, using real interaction patterns to continuously improve agent performance.
