TL;DR:

- Legacy tools were built for static IVRs, not AI voice flows

- Modern voice agent analytics give real-time, flow-level visibility

- You need LLM scoring, hallucination detection, and debugging at scale

Quick filter: If your dashboard can’t answer “which path failed and why?” you’re flying blind.

When 15% of calls are misunderstood, businesses lose trust and revenue

In a mid-sized retail company, an AI voice agent handled 60 percent of inbound calls but misinterpreted 15 percent of customer intents, misrouting callers and driving a 12 percent drop in customer satisfaction within two weeks. The operations team tracked average handle time and call volume, yet never saw the misinterpretations buried in aggregate metrics. By the time supervisors reacted to rising escalations, hundreds of frustrated customers had already churned. If you’ve ever been on a Monday morning ops call, this story feels uncomfortably familiar.

- Legacy analytics tools miss hallucinations, context errors, and intent drift

- Modern voice agent analytics simulate real-world chaos: noise, accents, latency

- Live call monitoring and LLM scoring flag issues before users churn

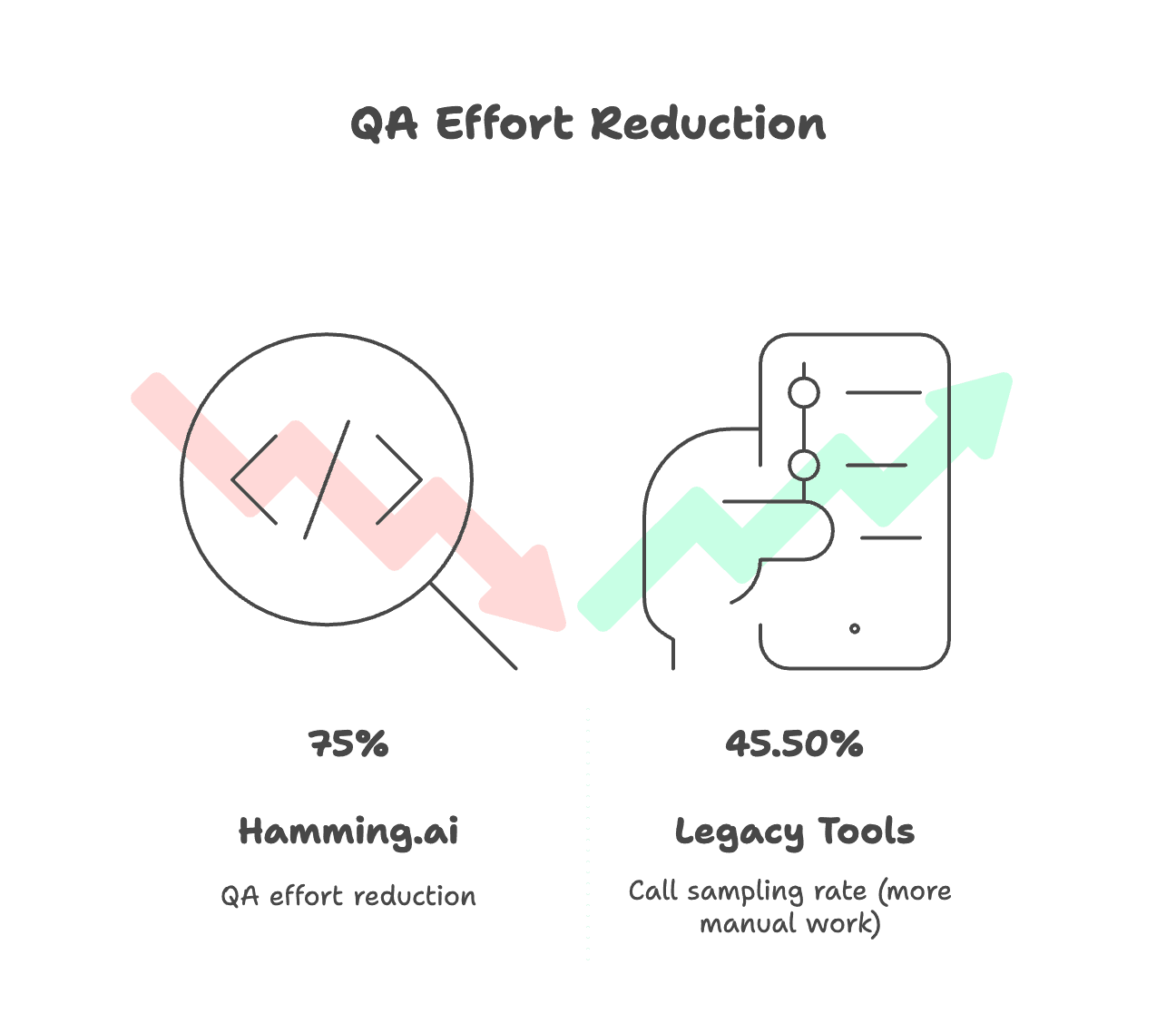

- When Lilac Labs used Hamming's stress-testing platform, they saved 5,200 hours annually and $520K per year by automating their QA processes and catching edge-case failures early

What Modern Voice Agent Analytics Do Differently

Traditional analytics center on a few aggregate metrics that miss the complexities of AI-driven conversations. In practice, that means you miss when an AI agent mishears a customer or loses its place in a multi-step flow. Testing with real background noise, different accents, slow connections, and varied dialogue paths would catch those failures before launch. Hamming automates stress testing at scale and spots misinterpretations, accuracy drift, and slow responses before they reach customers.

Focus on aggregate call metrics (AHT, volume, sentiment)

- Average Handle Time (AHT): AHT optimizations often shorten calls but miss whether users actually got what they needed. That’s a huge risk with voice agents. I’ve seen teams celebrate faster calls while customer outcomes quietly got worse.

- Call Volume: A drop in call volume often signals containment but provides no insight into whether customer issues are fully resolved or simply abandoned mid-conversation.

- Sentiment Scores: Capturing sentiment only at the end of a call misses the emotional arc, which often starts with a single trigger and builds over multiple turns. End-of-call snapshots may show neutral sentiment in 88.3% of calls while masking real spikes of frustration or relief.
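To make the sentiment point concrete, here is a minimal Python sketch that compares an end-of-call snapshot with the per-turn arc. The scores, the -1 to +1 scale, and the `frustration_threshold` default are illustrative assumptions, not output from any particular sentiment model or from Hamming.

```python
from dataclasses import dataclass


@dataclass
class ArcSummary:
    final_score: float     # what an end-of-call snapshot would report
    lowest_score: float    # worst moment anywhere in the call
    lowest_turn: int       # 0-based index of that moment
    frustrated_turns: int  # number of turns at or below the threshold


def summarize_arc(turn_scores: list[float],
                  frustration_threshold: float = -0.5) -> ArcSummary:
    """Summarize per-turn sentiment (assumed scale: -1 negative to +1 positive)."""
    if not turn_scores:
        raise ValueError("turn_scores must not be empty")
    # Index of the lowest score; ties resolve to the earliest turn.
    lowest_turn = min(range(len(turn_scores)), key=turn_scores.__getitem__)
    return ArcSummary(
        final_score=turn_scores[-1],
        lowest_score=turn_scores[lowest_turn],
        lowest_turn=lowest_turn,
        frustrated_turns=sum(1 for s in turn_scores if s <= frustration_threshold),
    )


# Hypothetical call: starts fine, dips sharply mid-call, ends near neutral.
scores = [0.2, 0.1, -0.7, -0.8, -0.3, 0.0]
summary = summarize_arc(scores)
print(summary)
```

In this made-up call the final score is neutral, yet two turns fall below the frustration threshold. An end-of-call snapshot would hide that dip; the per-turn view keeps it.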

How Legacy Tools Miss What Actually Happens in Voice Conversations

- Contextual Errors: Legacy analytics often inspect only the last utterance or a narrow context window. They miss intent shifts that depend on earlier exchanges, so multi-turn misunderstandings go undetected.

- Branching Paths: A single voice-agent script can branch dozens of times, yielding hundreds of unique conversation paths. Manual QA reviews cover just 2–3 percent of calls, so most of those paths never get tested and failures in rare but critical flows go unnoticed. That 2–3% number shows up a lot when I talk to QA teams.

- LLM Hallucinations: When an AI agent invents wrong price quotes, misstates policies, or confirms orders that do not exist, it causes regulatory breaches, drives refund requests, triggers surges in support tickets, and erodes customer trust.
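The branching-paths point is easy to underestimate, so here is a small Python sketch that counts distinct conversation paths through a flow. The `FLOW` graph and its node names are hypothetical, and the sketch assumes the flow has no loops.

```python
from functools import lru_cache

# Hypothetical flow: each node maps to the nodes a caller can reach next.
# Nodes with no outgoing edges are terminal (the call ends there).
FLOW: dict[str, list[str]] = {
    "greeting": ["order", "billing", "agent"],
    "order": ["confirm_item", "change_item"],
    "change_item": ["confirm_item", "agent"],
    "confirm_item": ["payment", "agent"],
    "billing": ["payment", "agent"],
    "payment": ["done", "agent"],
    "agent": [],
    "done": [],
}


@lru_cache(maxsize=None)
def count_paths(node: str) -> int:
    """Count distinct paths from `node` to any terminal node (assumes no cycles)."""
    next_nodes = FLOW[node]
    if not next_nodes:
        return 1
    return sum(count_paths(n) for n in next_nodes)


print(count_paths("greeting"))  # prints 11
```

Eight nodes already yield 11 distinct paths. Real flows with retries or loops would need a depth cap before counting, and the path count grows much faster than the node count.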

The unique demands of AI voice agents

AI voice agents talk directly to humans where emotions can shift in seconds. They need to handle sudden mood changes, interruptions, and unexpected requests as they happen.

Nonlinear dialogue flows and dynamic branching

Traditional analytics tools track surface-level KPIs like overall success rates or average handle time, but they miss what actually happens inside the conversation. A 2025 scientometric review that examined 284 IVR papers could not find a single study that tackled day-to-day call-center integration or objective ways to measure test coverage inside branching flows.

On the operations floor, the blind spot is huge: manual quality-assurance teams still listen to only 2–3 percent of interactions. At a typical center that handles about 4,400 calls a month, that leaves roughly 4,300 conversations unreviewed (97–98 percent of 4,400 is about 4,270 to 4,310).

Good analytics break down performance at the path level:

- Completion rate per branch to show where calls drop out.

- Average handle time per branch to spot loops or dead ends.

- Error rate at each decision node to pinpoint misunderstandings.
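The three path-level metrics above can be sketched in a few lines of Python. The `CallRecord` shape, its field names, and the sample calls are assumptions made for illustration; they are not a Hamming schema.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class CallRecord:
    branch: str               # hypothetical label for the path the call took
    completed: bool           # caller reached the end of the flow
    handle_time_s: float      # total call duration in seconds
    nodes_visited: list[str]  # decision nodes the call passed through
    error_nodes: list[str]    # nodes where the agent misunderstood the caller


def branch_metrics(calls: list[CallRecord]) -> dict[str, dict[str, float]]:
    """Call count, completion rate, and average handle time per branch."""
    grouped = defaultdict(list)
    for call in calls:
        grouped[call.branch].append(call)
    return {
        branch: {
            "calls": len(group),
            "completion_rate": sum(c.completed for c in group) / len(group),
            "avg_handle_time_s": sum(c.handle_time_s for c in group) / len(group),
        }
        for branch, group in grouped.items()
    }


def node_error_rates(calls: list[CallRecord]) -> dict[str, float]:
    """Share of calls visiting each node that hit an error at that node."""
    visits, errors = Counter(), Counter()
    for call in calls:
        visits.update(set(call.nodes_visited))
        errors.update(set(call.error_nodes))
    return {node: errors[node] / visits[node] for node in visits}


# Made-up sample data.
calls = [
    CallRecord("order>payment", True, 120.0, ["greeting", "order", "payment"], []),
    CallRecord("order>payment", False, 300.0, ["greeting", "order", "payment"], ["payment"]),
    CallRecord("billing", True, 90.0, ["greeting", "billing"], []),
    CallRecord("billing", True, 110.0, ["greeting", "billing"], ["greeting"]),
]
print(branch_metrics(calls))
print(node_error_rates(calls))
```

In this sample the aggregate completion rate is 75 percent, which looks acceptable. The per-branch view shows that the `order>payment` branch completes only half the time and that the errors concentrate at the `payment` node.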

This is where Hamming steps in. It captures these branch-level metrics across thousands of synthetic and live calls. That visibility exposes rare but critical failures and lets teams add targeted tests before issues ever reach customers.

Environmental noise, accents, latency variability

- Noise and accents: Generic voice engines often mis-transcribe when callers speak with accents or in noisy settings.

- Latency spikes: Many teams target responses under 0.3 seconds for turn-taking. When it takes longer, the interaction feels slow and callers get annoyed.
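One common way to quantify mis-transcription under noise or accents is word error rate (WER): the word-level edit distance between a reference transcript and what the engine heard, divided by the number of reference words. The article does not name a specific metric, so treat WER as an assumed choice; the sample utterances below are made up.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word dropped (deletion)
                curr[j - 1] + 1,     # extra hypothesis word (insertion)
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


reference = "i want to cancel my order"
clean = "i want to cancel my order"
noisy = "i want to cancel my border"  # hypothetical noisy-line transcript

print(word_error_rate(reference, clean))
print(word_error_rate(reference, noisy))
```

Running the same scripted utterances through clean and degraded audio and comparing the WER values gives a simple, repeatable measure of how much noise or accent variation costs in transcription accuracy.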

Callers expect human-level understanding, split-second replies, and flawless handling of every branch. Let’s look at analytics that actually meet those demands in practice.

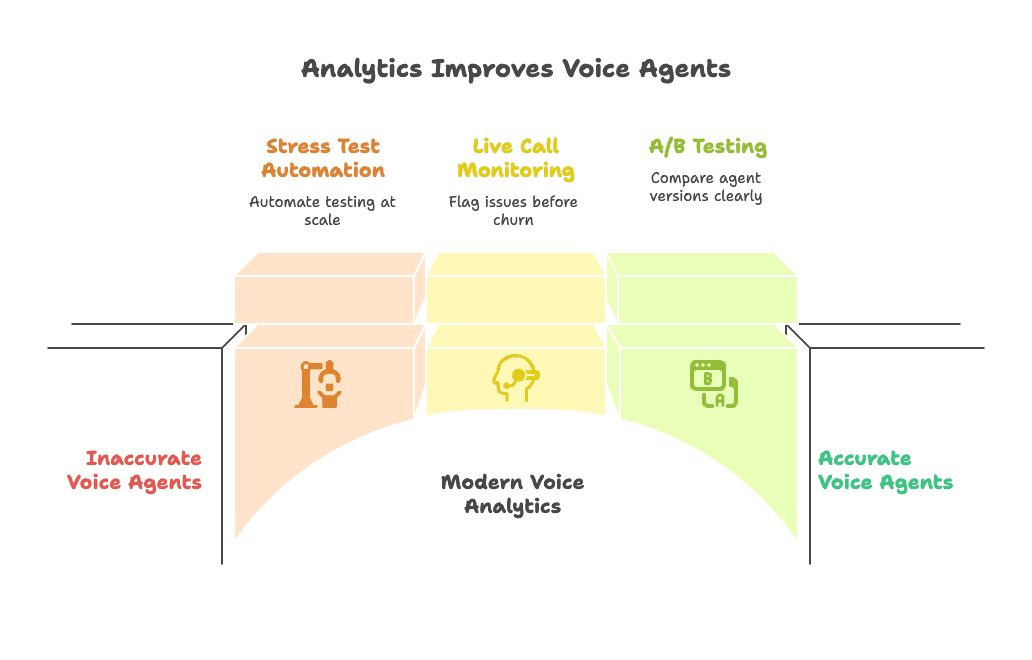

Modern voice agent analytics: Key capabilities that matter

Think of call analytics like cockpit instruments; without clear gauges, teams fly blind. Hamming delivers four essential panels:

1. Call quality reports

- Scenario-level metrics show transcription accuracy, routing success, and intent-error rates for every test or live call

2. Health checks and heartbeat monitoring

- Continuous production monitoring flags regressions, latency spikes, and misinterpretations in real time, so issues get fixed before they impact users

3. Voice agent A/B testing

- Side-by-side comparisons of agent versions on clear performance metrics (handle time, error reduction, and user satisfaction) drive data-led improvements

4. Actionable alerts and compliance guardrails

- In-platform alerts and automated red-teaming reports capture every failure path and ensure regulatory requirements are met
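For the A/B testing panel, a side-by-side comparison is only useful if the difference is larger than sampling noise. The sketch below applies a standard two-proportion z-test to the intent-error rates of two agent versions. The call counts are invented, and the choice of test is an assumption; the article does not say how Hamming compares versions.

```python
import math


def two_proportion_z_test(errors_a: int, calls_a: int,
                          errors_b: int, calls_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in error rates A - B."""
    p_a = errors_a / calls_a
    p_b = errors_b / calls_b
    # Pooled error rate under the null hypothesis that both versions are equal.
    pooled = (errors_a + errors_b) / (calls_a + calls_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / calls_a + 1 / calls_b))
    if std_err == 0:
        raise ValueError("error rates are all 0 or all 1; the test is undefined")
    z = (p_a - p_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: version A has 60 intent errors in 1,000 calls,
# version B has 40 intent errors in 1,000 calls.
z, p_value = two_proportion_z_test(60, 1000, 40, 1000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value suggests the gap between versions is unlikely to be chance alone. With only a few hundred calls per version, even a visible gap in the dashboard may not clear that bar.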

Get Started Fast: For a copy-paste dashboard with the 6 essential metrics and executive report template, see Voice Agent Dashboard Template.

Before vs After: Modern Voice Agents vs Legacy Tools in Analytics

| Capability | Before (Legacy) | After (Hamming) |
|---|---|---|
| Test Coverage | Samples 1–3% of calls | Stress tests thousands of paths in parallel |
| Error Detection | Aggregate metrics only (AHT, volume, sentiment) | Real-time detection of intent errors, model drift, and hallucinations |
| Latency & Noise Simulation | None | Simulates network spikes, background noise, accents |
| Compliance Reporting | Quarterly manual audits | Automated, audit-ready reports for GDPR, HIPAA, PCI |
| Test Suite Growth | Static, human-written scripts | Self-growing golden dataset from flagged live calls |

How Hamming compares to leading voice analytics platforms

Before evaluating any solution, it helps to understand where each platform fits within the broader analytics landscape. Here's a high-level comparison:

| Platform | Only high-level KPIs | Live LLM scoring | Real-world stress tests | Tests that grow themselves | Compliance reports on autopilot |
|---|---|---|---|---|---|
| NICE | ✔ | | | | Manual audits |
| Verint | ✔ | | | | Semi-automated |
| CallMiner | ✔ | | | | Template exports |
| Observe.AI | ✔ | (rule-based) | | | Basic exports |
| Hamming | | ✔ | ✔ | ✔ | ✔ |

Most legacy tools track only high-level call KPIs and rely on human-in-the-loop inspections. We rarely see incumbents generate large-scale synthetic tests or ingest live failures back into the test suite. Only Hamming combines mass stress testing, continuous LLM-based observability, and automated compliance reporting in a single platform.

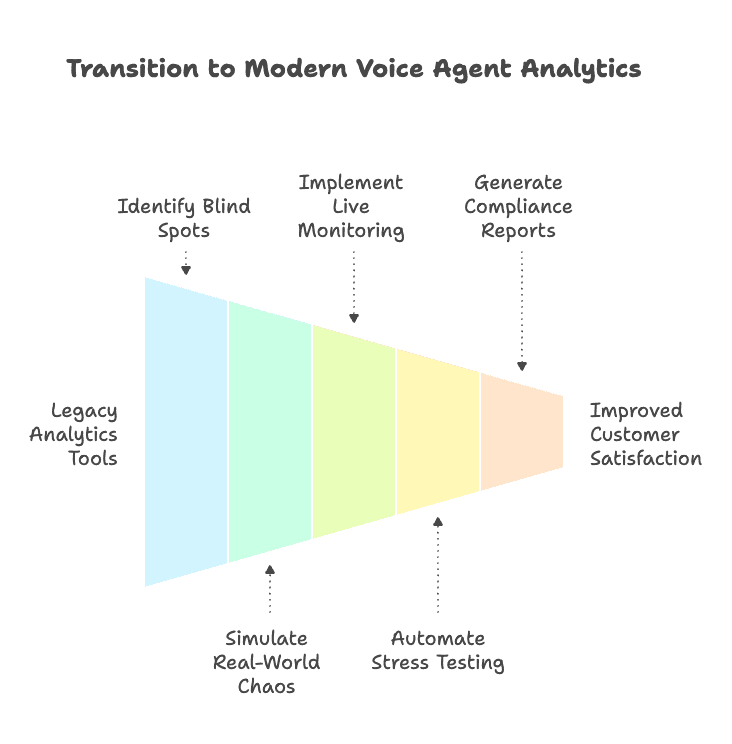

Next steps to adopt modern voice agent analytics

Rolling out a new analytics platform can feel daunting. Use this simple plan to get started and build momentum:

1. Pick your core flows

Identify the 5–10 customer journeys that matter most, such as order placement, payment checks, and compliance prompts. Starting simple shows results fast and gives you clean before/after comparisons.

2. Onboard stress tests in CI

Follow the GitHub Action tutorial to add Hamming into your CI pipeline. Every build runs existing tests, spins up new edge-case scenarios, and expands coverage automatically.

3. Configure metrics and alerts

Use the built-in intent-error and response-time dashboards. Set thresholds that match your SLAs (for example, "intent errors < 2 percent" or "response < 300 ms"). Tweak or add custom metrics as needed, and keep the first version intentionally boring.

4. Turn on production scoring

Enable live-call LLM scoring for those same flows. When production calls drift from your synthetic results, you'll know exactly where to investigate.

5. Block weekly review time

Spend 15 minutes each week checking the dashboard and reports. Triage any new failures, update tests or thresholds, and keep coverage aligned with features as they ship. This is where a lot of the real value shows up.

6. Measure and share ROI

Track saved engineer hours over time and share those wins with your team and stakeholders. If the analytics helped you avoid a weekend fire drill, write that down and share it.

7. Automate compliance exports

Enable one-click audit reports for GDPR, HIPAA and PCI so you never scramble at audit time.
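As an illustration of step 3, the example thresholds ("intent errors < 2 percent" and "response < 300 ms") can be expressed as a small check. This is a sketch under stated assumptions: the function names are hypothetical, the latency target is applied to the 95th percentile (the article does not say which statistic to use), and the sample numbers are invented.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_slas(intent_errors: int, total_turns: int,
               response_times_ms: list[float],
               max_intent_error_rate: float = 0.02,
               max_p95_response_ms: float = 300.0) -> list[str]:
    """Return a human-readable alert for each breached threshold."""
    alerts = []
    error_rate = intent_errors / total_turns
    if error_rate >= max_intent_error_rate:
        alerts.append(
            f"intent error rate {error_rate:.1%} is not below "
            f"{max_intent_error_rate:.0%}"
        )
    p95 = percentile(response_times_ms, 95)
    if p95 >= max_p95_response_ms:
        alerts.append(
            f"p95 response time {p95:.0f} ms is not below "
            f"{max_p95_response_ms:.0f} ms"
        )
    return alerts


# Hypothetical day of traffic: 3 intent errors in 200 turns, one slow response.
latencies_ms = [180, 210, 240, 250, 260, 270, 280, 290, 295, 450]
alerts = check_slas(intent_errors=3, total_turns=200,
                    response_times_ms=latencies_ms)
for alert in alerts:
    print(alert)
```

Here the intent-error rate (1.5 percent) passes while the latency check fails because of a single 450 ms outlier in a small sample. That is one reason to pick the percentile and the sample window deliberately before wiring thresholds to alerts.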

Putting these steps in place makes analytics a true partner rather than a post-mortem report. You'll catch edge-case failures before they reach customers, reduce support ticket spikes, and free up engineering time for new features.

Modern voice agent analytics pay for themselves by preventing churn and costly firefighting.

Start your first AI voice stress test with Hamming: See where legacy tools are leaving you blind.